Paper review - Generative Agents

In this post I review the exciting paper Generative Agents: Interactive Simulacra of Human Behavior. Read on to also be pleasantly surprised by what AI can do!

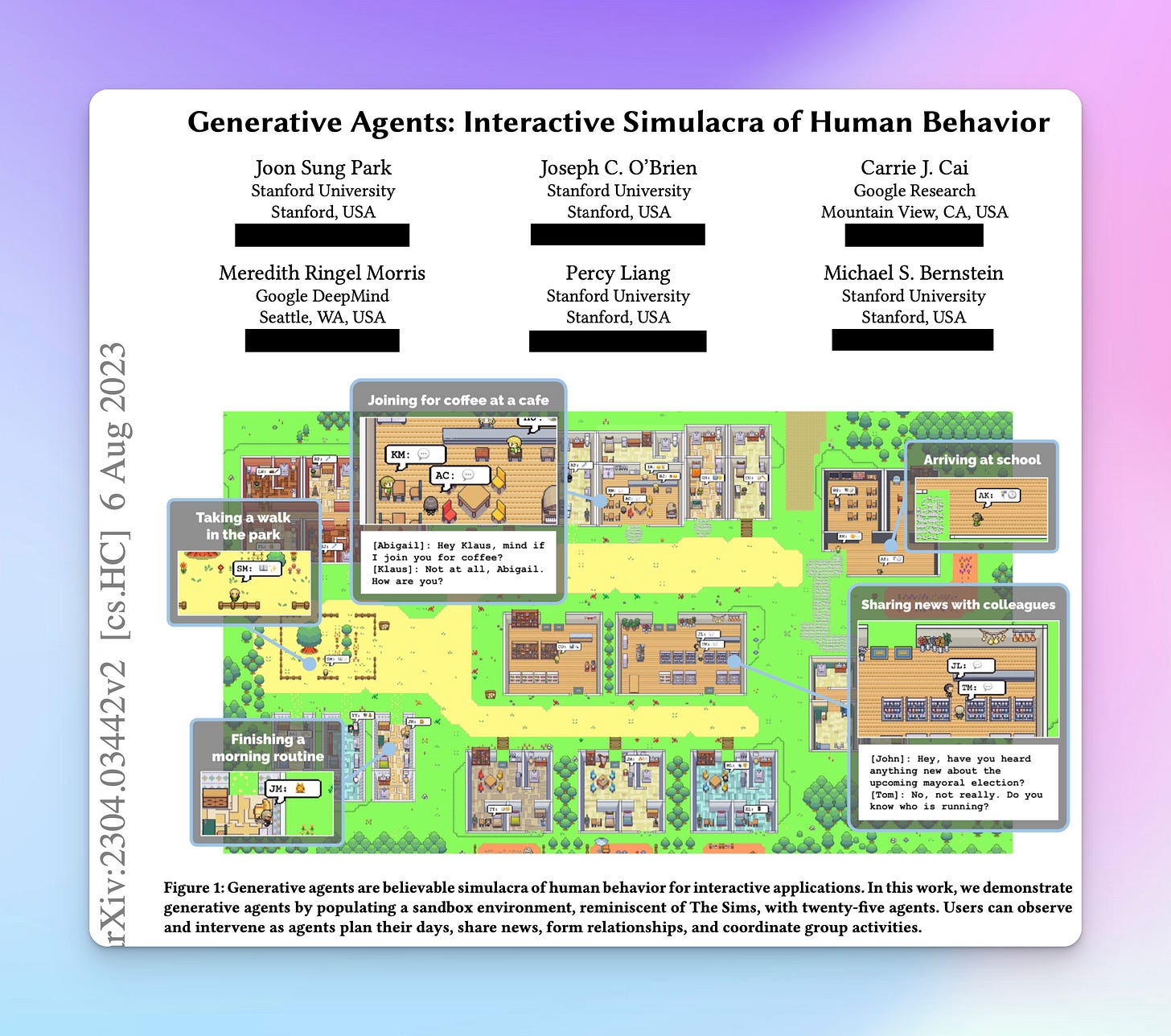

I’ve recently started reading papers about AI Agents as part of my foray into this field. Although I’m still early in this journey, I feel I have already come across one of the most exciting works so far: the paper “Generative Agents: Interactive Simulacra of Human Behavior”. From here on I’ll simply refer to it as “Generative Agents”.

Generative Agents is an exciting read about AI Agents that can simulate human behaviour in a virtual world, much like The Sims.

In this post we will first cover the problem that the researchers were trying to address with this work. Then we will discuss the novel approach they adopted. Finally, we will touch on the results they observed with this work, including some of the ethical considerations they raise at the end of the paper.

Why do we want agents that behave like humans?

For over 40 years, researchers and practitioners alike have attempted to create artificial agents that can serve as believable proxies for human behaviour. The reasons behind this quest are manifold. Being able to simulate human and group behaviour opens countless possibilities in both virtual and real-world settings.

Some of the use cases that this type of work, if successful, could unlock include:

training people on how to handle rare yet difficult interpersonal situations

testing social science theories

crafting model human processors for theory and usability testing

creating social robots

developing believable non-player characters (NPCs) in video games

Unfortunately, the problem space is vast and complex. While there has been encouraging work on simulating human behaviour at a single point in time, the research community has struggled to create believable agents that maintain long-term coherence.

One of the core challenges is handling an agent's ever-growing memories while still having it make believable decisions amid new interactions (with the environment or other agents), conflicts, and events.

The Memory Stream Architecture

Generative Agents addresses the problem of simulating believable agents by leveraging large language models (LLMs), which already have strong reasoning capabilities. But simply handing the reasoning to an LLM is not enough, given the avalanche of memories and interactions that agents must handle.

At the heart of the Generative Agents work lies a unique architecture that combines a large language model with three key components:

a memory stream

a retrieval model

a reflection module

Such an architecture allows the agents to react based on evolving experiences, make inferences, and maintain coherence in conversations with other agents. Let's break down each component from this architecture.

Memory Stream

The Memory Stream is at the heart of this system. It stores, in natural language, all of an agent's raw memories: its conversations and its interactions with other agents and with the environment. Much like human memory, it allows agents to draw upon past events when making decisions.
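To make the idea concrete, here is a minimal sketch of what a memory stream could look like as a data structure. It is my own illustration and not the authors' code: the `Memory` and `MemoryStream` names, the field names, and the example observations are all assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Memory:
    description: str      # natural-language record of what happened
    created_at: datetime  # when the event was observed
    importance: int = 1   # hypothetical 1-10 score, e.g. assigned by an LLM


@dataclass
class MemoryStream:
    # Append-only list of memories, oldest first.
    memories: list[Memory] = field(default_factory=list)

    def add(self, description: str, when: datetime, importance: int = 1) -> Memory:
        memory = Memory(description, when, importance)
        self.memories.append(memory)
        return memory

    def latest(self, n: int) -> list[Memory]:
        # Return the n most recent memories (empty list for n <= 0).
        return self.memories[-n:] if n > 0 else []


# Made-up observations, purely for illustration.
start = datetime(2023, 2, 13, 8, 0)
stream = MemoryStream()
stream.add("Isabella is setting up the cafe", start, importance=2)
stream.add("Isabella invited me to a Valentine's Day party",
           start + timedelta(hours=1), importance=7)
print([m.description for m in stream.latest(1)])
```

The point of the sketch is that nothing is thrown away: every observation is appended as plain text with a timestamp, and deciding what matters is left to a later retrieval step.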

Retrieval Model

The retrieval model is responsible for surfacing relevant memories from the stream based on factors like recency, relevance, and importance. This ensures that agents can access the most pertinent information when needed, just as we selectively recall memories in our daily lives.
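The scoring idea can be sketched as a weighted sum of the three factors. This is an illustration under stated assumptions rather than the paper's implementation: the exponential decay factor of 0.995 per game hour, the min-max normalisation, and the equal weights are the settings as I recall them from the paper, and the two-dimensional embeddings are toy values standing in for a real embedding model.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    description: str
    hours_since_access: float  # game hours since the memory was last retrieved
    importance: float          # assumed 1-10 score
    embedding: list            # toy vector; a real system would use an embedding model


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def normalise(values):
    # Min-max scale to [0, 1]; all-equal inputs map to 0.
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(memories, query_embedding, k=2, decay=0.995, weights=(1.0, 1.0, 1.0)):
    if not memories:
        return []
    recency = normalise([decay ** m.hours_since_access for m in memories])
    importance = normalise([m.importance for m in memories])
    relevance = normalise([cosine(m.embedding, query_embedding) for m in memories])
    w_rec, w_imp, w_rel = weights
    scored = [
        (w_rec * r + w_imp * i + w_rel * v, m)
        for r, i, v, m in zip(recency, importance, relevance, memories)
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in scored[:k]]


# Made-up memories; the query vector stands in for "tell me about the party".
memories = [
    Memory("ate breakfast", 1.0, 1, [1.0, 0.0]),
    Memory("Isabella invited me to her party", 20.0, 8, [0.0, 1.0]),
    Memory("watered the plants", 40.0, 2, [0.9, 0.1]),
]
query = [0.0, 1.0]
top = retrieve(memories, query, k=1)
print(top[0].description)
```

In this toy run the party invitation wins even though breakfast is far more recent, because it scores highest on both importance and relevance.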

Reflection Module

I feel the reflection module is the most important part of this work. At least it is the most intriguing!

Reflection enables agents to generate higher-level, abstract thoughts and inferences based on their experiences. Interestingly, the authors use the term reflection to refer to a different type of memory that is derived from the traditional memory stream.

With reflection, the agents periodically summarise and reflect on their memories, which helps them maintain consistency and coherence in their behaviour over time.
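Here is a rough sketch of how such a reflection loop could be wired up. It assumes, as I recall from the paper, that a reflection is triggered once the summed importance of recent observations crosses a threshold (150 in the authors' setup). The `ReflectiveAgent` class is hypothetical, and `fake_summarise` is a stand-in for the LLM call that would produce the actual insight.

```python
from typing import Callable, List


class ReflectiveAgent:
    def __init__(self, summarise: Callable[[List[str]], str], threshold: float = 150):
        self.memories: List[str] = []
        self.summarise = summarise   # stand-in for an LLM call
        self.threshold = threshold   # assumed trigger level
        self._unreflected: List[str] = []
        self._importance_since_reflection = 0.0

    def observe(self, description: str, importance: float) -> None:
        self.memories.append(description)
        self._unreflected.append(description)
        self._importance_since_reflection += importance
        if self._importance_since_reflection >= self.threshold:
            self.reflect()

    def reflect(self) -> None:
        # The reflection is stored back in memory alongside raw observations.
        insight = self.summarise(self._unreflected)
        self.memories.append(f"[reflection] {insight}")
        self._unreflected = []
        self._importance_since_reflection = 0.0


def fake_summarise(observations: List[str]) -> str:
    # A real system would prompt an LLM here; this just counts events.
    return f"{len(observations)} recent events seem connected"


# Low threshold and made-up observations so the example triggers quickly.
agent = ReflectiveAgent(fake_summarise, threshold=10)
agent.observe("Klaus is reading about gentrification", 4)
agent.observe("Klaus is taking notes at the library", 3)
agent.observe("Klaus discussed his research with Maria", 5)
print(agent.memories[-1])
```

The design choice worth noticing is that the reflection is written back into the same memory list, so later retrievals can surface the higher-level insight as well as the raw events it was derived from.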

Observations from paper

The authors of the paper created a community of 25 unique agents that interact with each other in a town called Smallville. Each agent is initialised using a prompt in plain English. This prompt describes the agent’s identity, occupation, preferences, and relationships with other agents. These statements serve as the agent's seed memories.

In an end-to-end evaluation, the researchers let the agents interact in their virtual world for two full game days. They then examined the agents' emergent behaviour and identified errors.
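As a small illustration of the seeding step, the snippet below turns a plain-English description into individual seed memories. The semicolon delimiter reflects how I read the paper and should be treated as an assumption, and the `seed_memories` helper and the character "Ada Example" are invented for this example.

```python
def seed_memories(description: str) -> list:
    # Split the description into one seed memory per semicolon-separated phrase.
    return [part.strip() for part in description.split(";") if part.strip()]


# Hypothetical agent description, not taken from the paper.
description = (
    "Ada Example is a baker at the Smallville bakery; "
    "Ada likes to talk about bread; "
    "Ada is friends with her neighbour Tom"
)

seeds = seed_memories(description)
for seed in seeds:
    print(seed)
```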

One of the most fascinating outcomes observed in the paper's evaluations was the emergence of coordinated behaviour and relationship formation among agents, without any explicit user intervention.

For instance, agents spread information, formed opinions about each other, and even coordinated events like parties, all driven by their internal architecture. During the two-day run of the simulation, a Valentine’s Day party was organised by an agent called Isabella. Interestingly, without any external intervention, 52% of the agents became aware of the event. Some even decided to attend it.

This type of emergent behaviour surprised the researchers because it requires agents to diffuse information about specific events without hallucinating. 5 out of the 12 agents invited to the party showed up. Those who did not attend either cited conflicts in their schedule or simply did not show up, just like humans!

Limitations and ethical considerations

While my impressions of the work conducted in this paper were positive overall, not everything was rosy.

The authors acknowledged several limitations to their work. These include:

Inherited limitations and biases of the underlying language model (this work used GPT-3.5 Turbo rather than the more recent and powerful GPT-4)

Lack of systematic robustness evaluation (prompt hacking, hallucinations etc.)

Potential for erratic behaviours due to memory retrieval challenges (memory decays over time)

Agents being overly polite and cooperative, most likely due to prompt wording and bias in the underlying LLM (we all know humans are not always nice!)

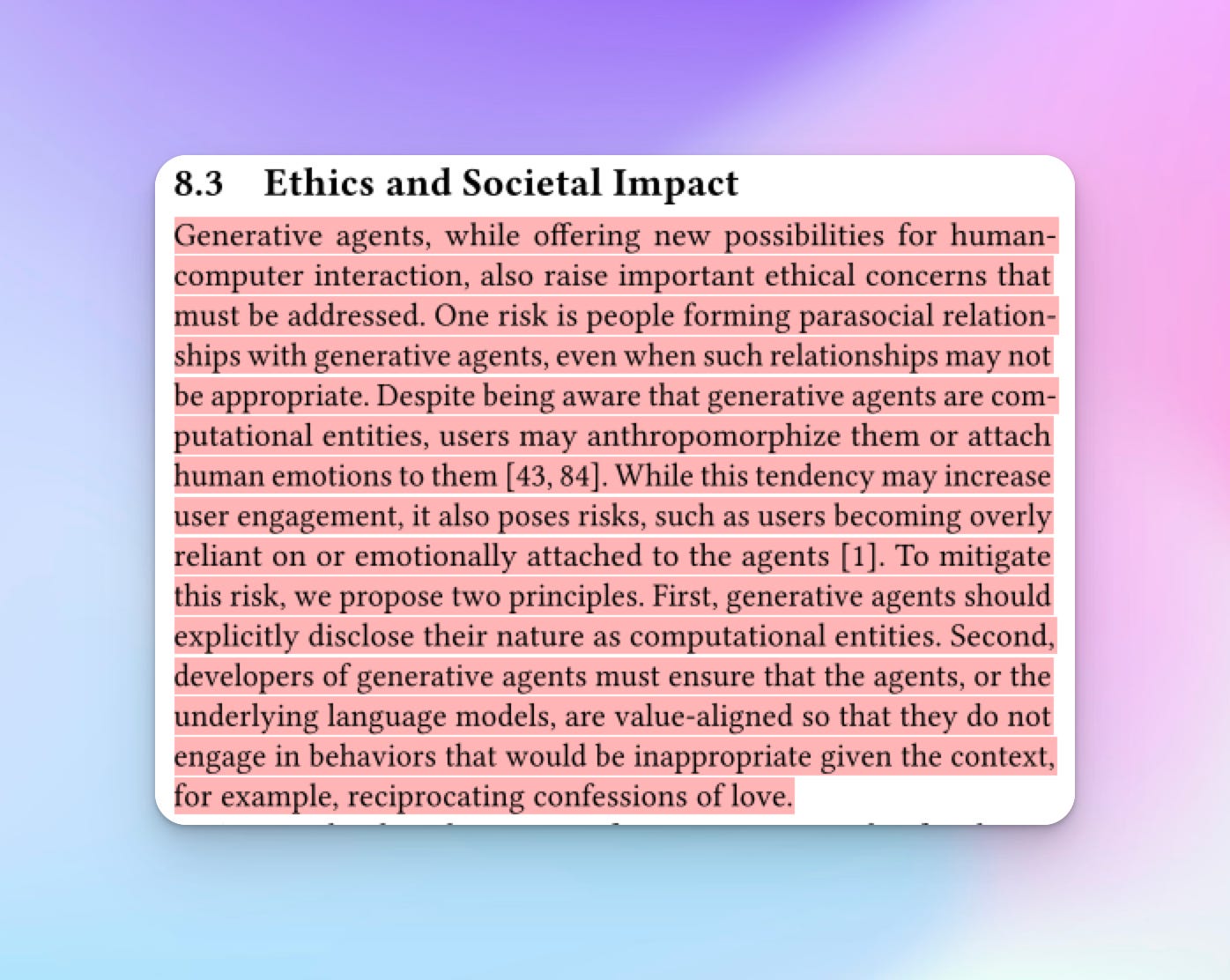

Ethical concerns around humans forming parasocial bonds with agents, anthropomorphising them, and developing deep feelings for them

Closing thoughts

Overall, the "Generative Agents" paper presents an exciting step towards realising the vision of autonomous agentic AI systems in game-like environments, and potentially beyond.

These agent systems can reason, plan, and adapt in dynamic, open-world environments. While there are still challenges to overcome, this work demonstrates the potential of combining large language models with memory, retrieval, and reflection capabilities.

I highly recommend giving this paper a read, whether you're an AI enthusiast, a researcher, or simply someone curious. This seminal work represents a glimpse into the exciting possibilities that lie ahead as we continue to push the boundaries of what AI can achieve.

Personally, I found this research both inspiring and thought-provoking. I was impressed that the researchers built such an advanced system using “just” GPT-3.5. Imagine how much better their work could have been, had they had access to the superior reasoning capabilities of GPT-4!

This paper also made me think deeply about how I could incorporate the elements around the memory stream in my own AI Agent library.

If you’re curious about AI Agents, make sure to follow my daily posts on this topic on Twitter/X.